Research using InDro robots for real-world autonomy

By Scott Simmie

As you’re likely aware by now, InDro builds custom robots for a wide variety of clients. Many of those clients are themselves researchers, creating algorithms that push the envelope in multiple sectors.

Recently, we highlighted amazing work being carried out at the University of Alberta, where our robots are being developed as Smart Walkers – intended to assist people with partial paralysis. (It’s a really fascinating story you can find right here.)

Today, we swing the spotlight down to North Carolina State University. That’s where we find Donggun Lee, Assistant Professor in the Departments of Mechanical Engineering and Aerospace Engineering. Donggun holds a PhD in Mechanical Engineering from UC Berkeley (2022), as well as a Master of Science in the same discipline from the Korea Advanced Institute of Science and Technology. He oversees a small team of dedicated researchers at NCSU’s Intelligent Control Lab.

“We are working on safe autonomy in various vehicle systems and in uncertain conditions,” he explains.

That work could one day lead to safer and more efficient robot deliveries and enhance the use of autonomous vehicles in agriculture.

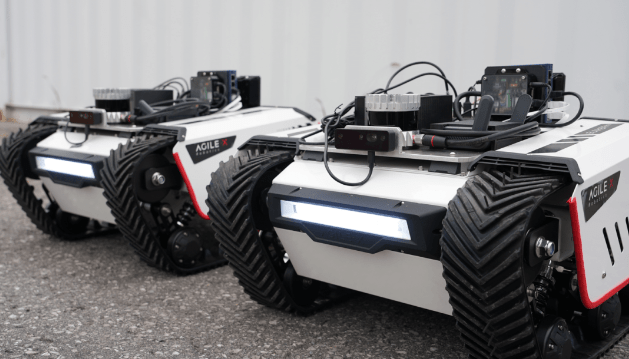

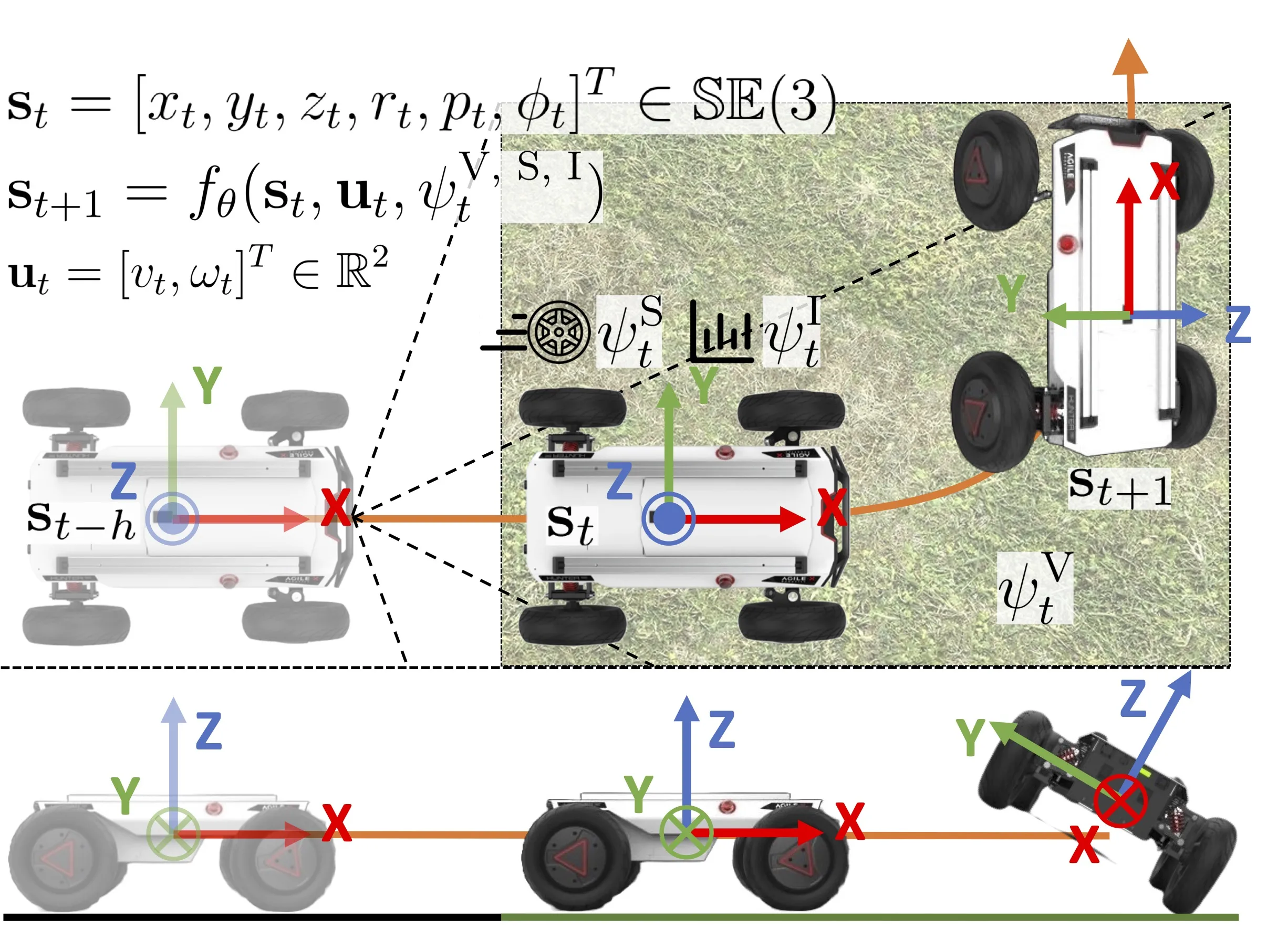

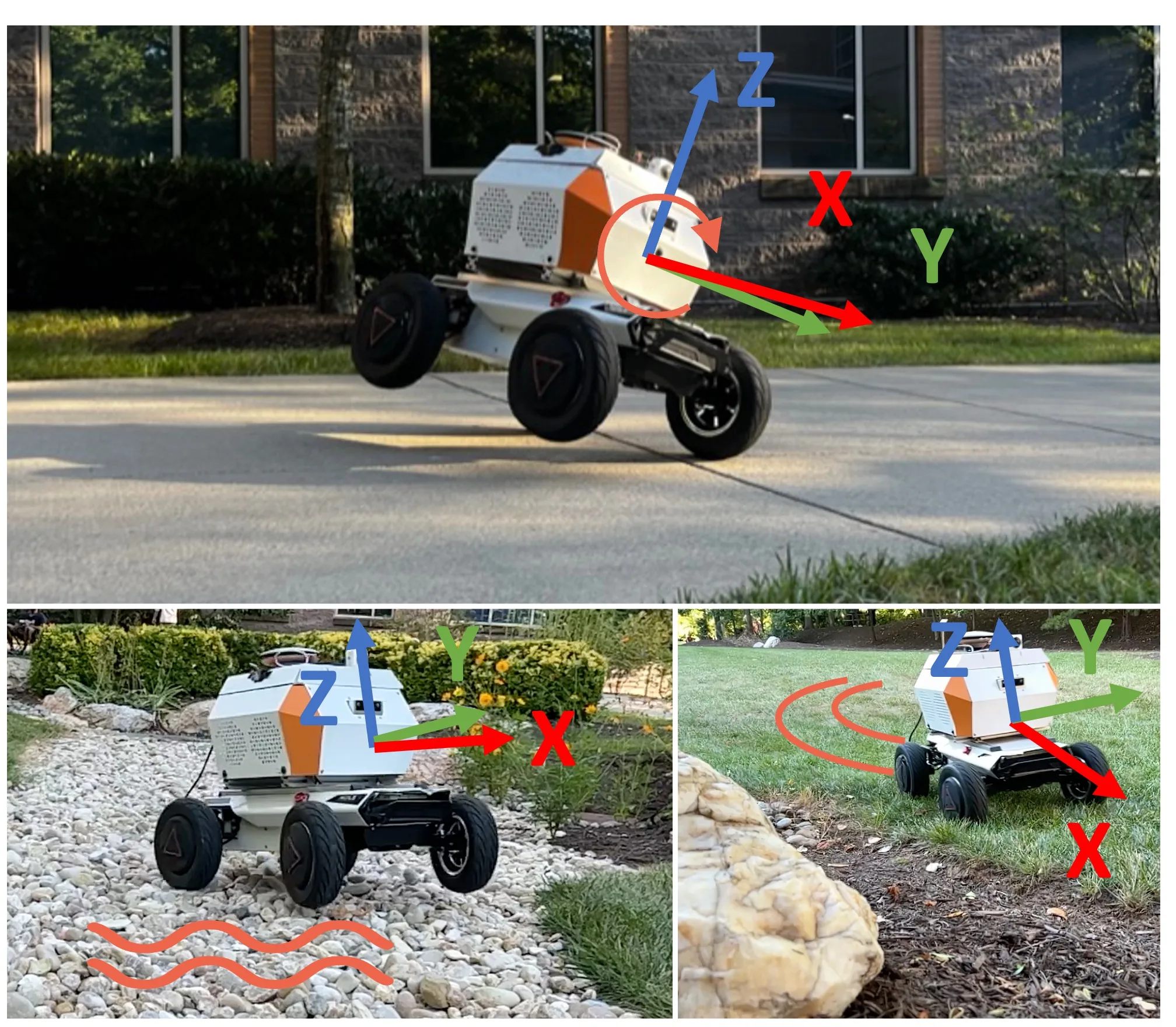

Below: Four modified AgileX Scout Mini platforms, outfitted with LiDAR, depth cameras and Commander Navigate, are being used for research at NCSU. The chart below shows the features of the Commander Navigate package.

“UNCERTAIN” CONDITIONS

When you head out for a drive, it’s usually pretty predictable – but never certain. Maybe an oncoming vehicle will unexpectedly turn in front of you, or someone you’re following will spill a coffee on their lap and slam on their brakes. Perhaps the weather will change and you’ll face slippery conditions. As human beings, we’ve learned to respond as quickly as we can to uncertain scenarios or conditions. And, thankfully, we’re usually pretty good at it.

But what about robots? Delivery robots, for example, are already being rolled out at multiple locations in North America (and are quite widespread in China). How will they adapt to other robots on the road, or to human-driven vehicles and even pedestrians? How will they adapt to slippery patches of ice or other unanticipated changes in terrain? The big picture goes far beyond obstacle avoidance, particularly if you’re also interested in efficiency. How do you ensure safe autonomy without being so careful that you slow things down?

These are the kinds of questions that intrigue Donggun Lee. And, for several years now, he has been searching for answers through research. To give you an idea of how his brain ticks, here’s the abstract from one of his co-authored IEEE papers:

> Autonomous vehicles (AVs) must share the driving space with other drivers and often employ conservative motion planning strategies to ensure safety. These conservative strategies can negatively impact AV’s performance and significantly slow traffic throughput. Therefore, to avoid conservatism, we design an interaction-aware motion planner for the ego vehicle (AV) that interacts with surrounding vehicles to perform complex maneuvers in a locally optimal manner. Our planner uses a neural network-based interactive trajectory predictor and analytically integrates it with model predictive control (MPC). We solve the MPC optimization using the alternating direction method of multipliers (ADMM) and prove the algorithm’s convergence.
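For readers curious how MPC and ADMM fit together, here is a minimal Python sketch under heavy simplifying assumptions. It is not the planner from the paper, which adds a neural-network trajectory predictor and interaction with other vehicles. Instead it uses a hypothetical one-dimensional robot with a speed limit that picks a short sequence of speed commands to approach a target. The model, the function names (`admm_box_qp`, `mpc_inputs`) and every parameter value are our own illustrative choices.

```python
import math

def cholesky(a):
    """Return lower-triangular L with L L^T == a (a must be symmetric positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(a[i][i] - s) if i == j else (a[i][j] - s) / L[j][j]
    return L

def chol_solve(L, b):
    """Solve (L L^T) x = b by forward, then backward, substitution."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):
        y[i] = (b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def admm_box_qp(P, q, lo, hi, rho=1.0, iters=1000):
    """Minimise 0.5 u'Pu + q'u subject to lo <= u <= hi using scaled-form ADMM.

    Split: f(u) = quadratic cost, g(z) = indicator of the box, coupled by u = z.
    """
    n = len(q)
    # (P + rho I) is the same every iteration, so factor it once.
    L = cholesky([[P[i][j] + (rho if i == j else 0.0) for j in range(n)] for i in range(n)])
    z = [0.0] * n
    w = [0.0] * n  # scaled dual variable
    for _ in range(iters):
        # u-update: minimise the quadratic plus (rho/2)||u - z + w||^2 (a linear solve)
        u = chol_solve(L, [rho * (z[i] - w[i]) - q[i] for i in range(n)])
        # z-update: project u + w onto the box (a simple clamp)
        z = [min(hi, max(lo, u[i] + w[i])) for i in range(n)]
        # dual update accumulates the disagreement between u and z
        w = [w[i] + u[i] - z[i] for i in range(n)]
    return z

def mpc_inputs(x0, target, horizon=10, dt=0.1, lam=0.01, u_max=1.0):
    """Toy MPC for a hypothetical robot x[k+1] = x[k] + dt*u[k] with |u| <= u_max."""
    N = horizon
    # Stacked prediction of the future positions: x = x0 + S u
    S = [[dt if j <= k else 0.0 for j in range(N)] for k in range(N)]
    # Cost sum_k (x[k+1] - target)^2 + lam * sum_k u[k]^2, rewritten as 0.5 u'Pu + q'u + const
    P = [[2.0 * (sum(S[k][i] * S[k][j] for k in range(N)) + (lam if i == j else 0.0))
          for j in range(N)] for i in range(N)]
    q = [2.0 * (x0 - target) * sum(S[k][i] for k in range(N)) for i in range(N)]
    return admm_box_qp(P, q, -u_max, u_max)
```

Calling `mpc_inputs(0.0, 5.0)` asks a robot at 0 m to head for a target 5 m away. Because the target is out of reach within the one-second horizon, every command ends up at the 1.0 speed limit. The appeal of ADMM in this toy is that each iteration only needs two easy steps: a linear solve for the cost and a clamp for the limits.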

That gives you an idea of what turns Donggun’s crank. But with four InDro robots now in his lab, he says the research could head in many directions.

“Any vehicle applications are okay in our group,” he explains. “We just try to develop general control and AI machine learning framework that works well in real vehicle scenarios.”

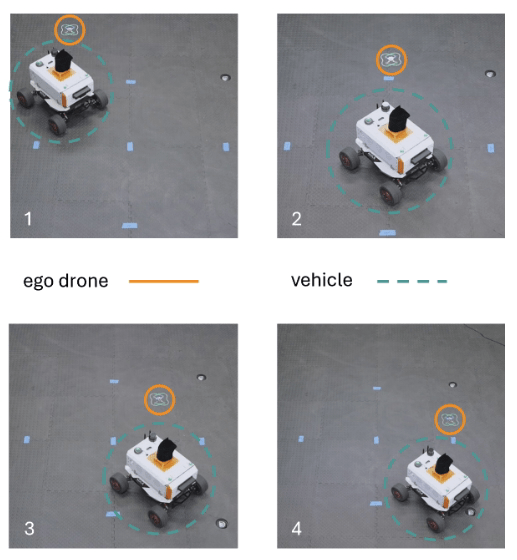

One of the many applications that intrigue Donggun is agriculture. He’s interested in algorithms that could be used on a real farm, so that an autonomous tractor could safely follow an autonomous combine. In this case, the team has programmed an open-source Crazyflie drone to autonomously follow the InDro robot. Although the follower is a drone, Donggun says the algorithm could be applied to that agricultural work.

“You can easily replace a drone with a ground vehicle,” he explains.

And that’s not all.

“We are also currently tackling food delivery robot applications. There are a lot of uncertainties there: Humans walking around the robot, other nearby robots…How many humans will these robots interact with – and what kind of human behaviours will occur? These kinds of things are really unknown; there are no prior data.”

And so Donggun hopes to collect some.

“We want to develop some sort of AI system that will utilise the sensor information from the InDro robots in real-time. We eventually hope to be able to predict human behaviours and make decisions in real-time.”

Some of Donggun’s earlier research will also carry over to this new work; the paper quoted above is a good example. Alongside the planned work on human-robot interaction, that research could be applied to maximise efficiency.

“There is trade-off between safety guarantees and getting high performance. You want to get to a destination as quickly as possible and at speed while still avoiding collisions.”

He explains that the pendulum tends to swing toward caution, with algorithms accounting for virtually every scenario, including ones that are highly unlikely. By excluding some of those exceedingly rare ‘what-ifs’, he says, speed and efficiency can be maximised without compromising safety.
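To make that trade-off concrete, here is a toy Python sketch, again our own illustration rather than Donggun’s method. We assume a hypothetical robot that sets its speed so it can always stop before a predicted pedestrian intrusion into its path. A worst-case planner reserves room for every predicted intrusion, while a risk-bounded planner reserves room only for a chosen quantile and ignores the rarest cases. The function names, the braking and reaction-time values, and the sample predictions are all invented for illustration.

```python
import math

def stopping_speed(clear_distance, decel=2.0, reaction_time=0.3):
    """Highest speed (m/s) whose stopping distance v*t + v^2/(2a) fits in clear_distance."""
    if clear_distance <= 0:
        return 0.0
    # Positive root of v^2/(2a) + v*t - d = 0
    return decel * (-reaction_time + math.sqrt(reaction_time ** 2 + 2.0 * clear_distance / decel))

def quantile(samples, p):
    """Nearest-rank p-quantile of a list of samples (0 < p <= 1)."""
    s = sorted(samples)
    k = max(0, math.ceil(p * len(s)) - 1)
    return s[k]

def planned_speed(gap, predicted_intrusions, risk=None):
    """Reserve a buffer for how far a pedestrian might step into the robot's path.

    risk=None -> worst case: the buffer covers every predicted sample (most conservative).
    risk=0.01 -> the buffer covers 99% of samples, ignoring the rarest 1% of 'what-ifs'.
    """
    if risk is None:
        buffer = max(predicted_intrusions)
    else:
        buffer = quantile(predicted_intrusions, 1.0 - risk)
    return stopping_speed(gap - buffer)

# Hypothetical predictions (metres): 99 ordinary cases plus one rare, large intrusion.
intrusions = [0.2 + 0.005 * i for i in range(99)] + [3.0]
v_worst = planned_speed(6.0, intrusions)
v_risk = planned_speed(6.0, intrusions, risk=0.01)
```

With a 6 m gap and these made-up predictions, the worst-case rule allows roughly 2.9 m/s, while ignoring the rarest 1% allows roughly 4.0 m/s. The `risk` parameter acts as a crude stand-in for the safety-versus-performance dial; in real work, the hard part is justifying that the excluded cases really are rare.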

Below: Image from Donggun’s autonomy research showing the InDro robot being followed by an open-source Crazyflie drone.

INDRO’S TAKE

We, obviously, like to sell robots. In fact, our business depends on it.

And while we put all of our clients on a level playing field, we have a special place in our non-robotic hearts for academic institutions doing important R&D. This is the space where breakthroughs are made.

“I really do love working with people in the research space,” says Head of R&D Sales Luke Corbeth. “We really make a concerted effort to maximise their budgets and, when possible, try to value-add with some extras. And, as with all clients, InDro backs what we sell with post-sale technical support and troubleshooting.”

The robots we delivered to NCSU were purchased under a four-year budget and delivered last summer. Though the team is already carrying out impressive work, we know there’s much more to come and will certainly check in a year or so down the road.

In the meantime, if you’re looking for a robot or drone, whether in the R&D or Enterprise sectors, feel free to get in touch with us here. Luke takes pride in finding solutions that work for clients.