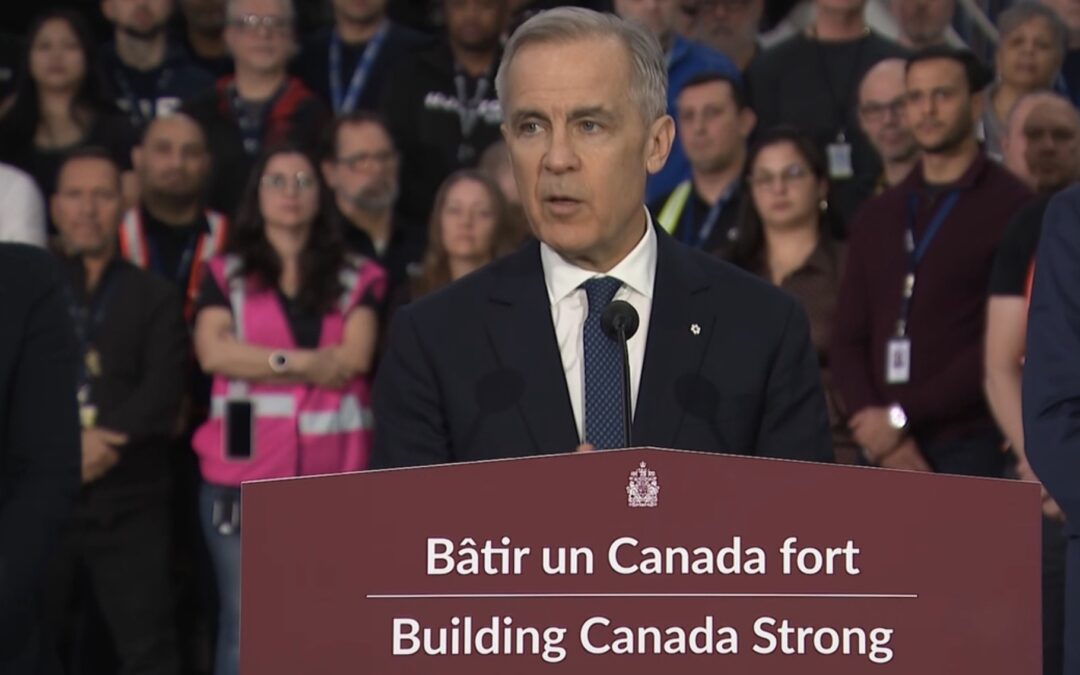

New Defence Industrial Strategy puts emphasis on Canadian tech sector

By Scott Simmie

Canada has released its long-awaited Defence Industrial Strategy. It’s a blueprint for defence and sovereignty in a rapidly changing world – and has profound implications for Canada’s technology sector.

In a nutshell, the DIS will focus on “rebuilding, rearming, and reinvesting in the Canadian Armed Forces (CAF),” says a news release from the Prime Minister’s Office. It includes a huge emphasis on developing new dual-purpose technologies, along with massive capital investment over the next decade.

“In total, the Defence Industrial Strategy is an investment of over half a trillion dollars in Canadian security, economic prosperity, and our sovereignty,” says the release.

Historically, Canada’s procurement process has been somewhat slow and burdened with red tape. It has also relied heavily on US suppliers. The Strategy will focus on developing made-in-Canada solutions, streamlining procurement, and expanding partnerships with other allies, and will be overseen by the Defence Investment Agency (DIA).

“The Defence Industrial Strategy strengthens Canada’s capacity to deliver critical capabilities to the Canadian Armed Forces with greater speed, certainty, and strategic coherence. It supports the Defence Investment Agency’s mandate by enabling more agile procurement and more secure, resilient supply chains,” said Doug Guzman, CEO of the DIA.

Above: Prime Minister Mark Carney announces Canada’s Defence Industrial Strategy in Montreal on February 17, 2026. The DIS will emphasise the development of Canadian technology in areas like robotics, AI, cybersecurity and more. Below: The InDro Cortex, a dual-purpose, AI-enhanced brain for UGVs and UAVs.

CANADIAN SOLUTIONS

Over the next decade, the DIS will commit $180B in defence procurement and $290B in defence-related capital investment. Canada has traditionally spent some 75 per cent of its procurement budget with US-based suppliers, but the new DIS will see a far greater emphasis on domestic solutions and innovations, as well as partnership with other allies.

“By building, innovating, and manufacturing in Canada, we are ensuring our industries benefit directly from defence investments while supporting the modernisation of the Canadian Armed Forces. Our government is meeting the moment for Canadians by driving growth, creating jobs across the country, and ensuring Canadians benefit from a stronger, more resilient defence economy,” said The Hon. Mélanie Joly, Minister of Industry and Minister responsible for Canada Economic Development for Quebec Regions.

The DIS has five pillars. They are:

- Position Canada as a defence production leader

- Reduce barriers between government and industry

- Scale Canada’s defence/dual-use innovation – and export it to allies

- Protect Canadian jobs, supply chains, and industries

- Spearhead a coordinated national effort to strengthen Canada’s defence sector

The news release from the Prime Minister’s Office sets out the strategy in greater detail.

INDUSTRY REACTION

Canada’s technology sector has been waiting for this announcement since it was first flagged by Defence Minister David McGuinty at last September’s GCXpo event. While many Canadian firms are solely dedicated to defence, many others (like InDro Robotics) develop dual-purpose innovations, meaning they have civilian, industrial and defence applications. For those companies, the reduction of red tape and an increased flow of funding mean significant opportunities.

Canada’s Dominion Dynamics, which is building “a dual-use, persistent, Arctic sensing network designed to serve both military and civilian purposes,” issued a news release welcoming the announcement.

“In areas such as digital systems, autonomous platforms, sensors, aerospace, advanced manufacturing, and secure sustainment, Canada has real strengths,” it states. “Choosing to build here first will strengthen strategic autonomy, create high-value jobs, and ensure that we retain control of critical IP and long-term capability. The Partner component is equally pragmatic. Canada cannot and should not attempt to do everything alone. Structured partnerships with trusted allies—where technology and intellectual property are genuinely co-developed—will make us stronger and more resilient.”

Below: Global News coverage of the DIS announcement

INDRO’S TAKE

InDro is no stranger to developing dual-purpose technologies that can be put to use for both commercial and defence purposes. One of many ongoing projects is a partnership with Montreal-based CHAAC Technologies for the AI detection and elimination of a particularly pernicious air-dropped land mine. Our InDro Cortex is a next-gen brain box capable of transforming any ground or aerial platform – even military vehicles – into tele-operated or autonomous devices, complete with AI and advanced machine vision capabilities.

“The new Defence Industrial Strategy is a bold and important step forward for Canada in an ever-shifting geopolitical world,” says InDro Founder and CEO Philip Reece. “And while this is welcome news for the technology sector in Canada, it is also – and more importantly – a strategic and smart move for Canada’s future defence and sovereignty capabilities.”

We anticipate there will be much more to tell you as the strategy is implemented. Stay tuned.