CBC Interviews InDro Founder/CEO Philip Reece on the New Federal Budget

By Scott Simmie

Canada’s new budget was unveiled Tuesday, November 4.

Delivered by Finance Minister François-Philippe Champagne, the budget placed heavy emphasis on technology and defence, both to boost global competitiveness and to reflect a changing geopolitical world. CBC carried extensive live coverage of the event, including a panel interview on the program The House featuring InDro Founder and CEO Philip Reece.

The headline for the tech sector? A massive investment in defence spending, including dual-purpose technologies that can be used for both defence and industrial or civilian purposes. And the money? It’s big, including $81.8B over five years to rebuild, rearm, and invest in the Canadian Armed Forces.

Here’s a breakdown:

- $17.9 billion over five years to expand Canada’s military capabilities, including investments in additional logistics utility, light utility, and armoured vehicles, counter-drone and drone long-range capabilities, and domestic production, among other investments.

- $6.6 billion to support the Defence Industrial Strategy.

- $6.2 billion over five years to expand Canada’s defence partnerships, including military assistance to Ukraine.

The Defence Industrial Strategy is new – and Canada’s first-ever such strategy. Details will be released in the coming months. But during the recent GCXpo in Ottawa, Defence Minister David McGuinty explained that it will lean heavily on Canada’s technology innovators.

“This is what I do know, and for sure: I know that at the heart of the strategy is you. The innovators, the investors, the risk-takers, the entrepreneurs, and the startups. You’re going to help us develop the dual-use technologies that are going to shape the future of defence and security,” he said.

Below: Dual-purpose technologies, such as our Sentinel inspection robot, could play a significant role in Canada’s Defence Industrial Strategy.

CANADA STRONG

It was clear from Finance Minister François-Philippe Champagne’s opening remarks that this would be a very different kind of budget.

“The world is undergoing a series of fundamental shifts at a speed, scale, and scope not seen since the fall of the Berlin Wall,” he said.

“The rules-based international order and the trading system that powered Canada’s prosperity for decades are being reshaped – threatening our sovereignty, our prosperity, and our values…. Budget 2025 represents the largest defence investment in decades.”

And perhaps most relevant for this sector?

“With our new Defence Investment Agency and Defence Industrial Strategy, we will build up Canada’s defence industry – strengthening Canadian businesses and supporting Canadian workers… We will further build our security and defence capabilities, right here at home – creating new jobs for our engineers, technicians and scientists in sectors such as aerospace, shipbuilding, cybersecurity, and AI.”

In many ways, the budget signified that Canada is at a critical turning point. And while it wasn’t all focused on defence and innovation, there was a striking emphasis on these areas. And that means challenges – and opportunities – right across the entire technology sector.

“This is about more than one company; it’s about building a Canadian defence ecosystem,” says Reece.

INNOVATION IN A CHANGING WORLD

The world is changing in unprecedented ways. The geopolitical stability we’ve traditionally enjoyed is now far less certain. Significant conflicts, using newer technologies, are in the news every day. So it was no surprise that the Canada Strong budget emphasized the need for this country to advance its capabilities.

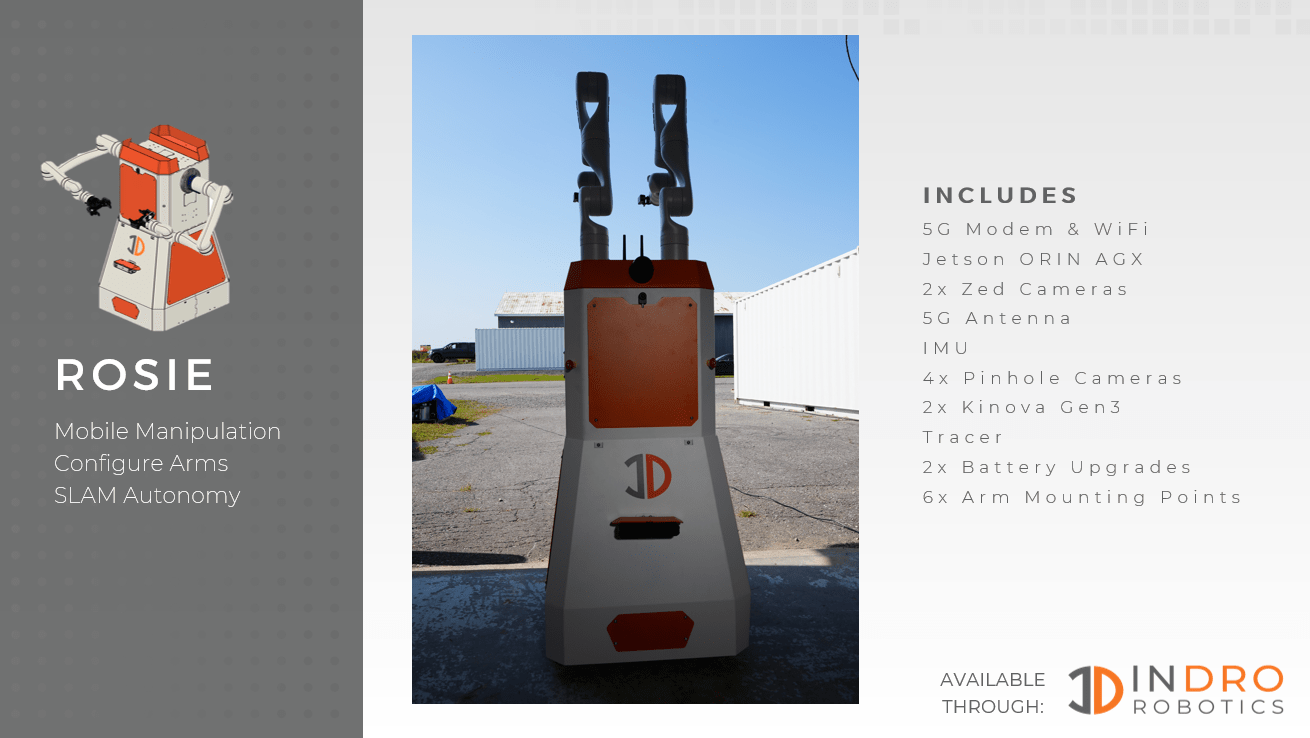

Following the budget, CBC’s The House interviewed a panel that included InDro Robotics Founder/CEO Philip Reece, who offered a reaction from the technology sector. He began by noting the budget’s potential to help grow small and medium-sized enterprises (SMEs), which are the backbone of bringing new technologies to the fore.

“This budget is a strong start for that,” he said. “Now…we need the Canadian government to follow through and allow innovators like InDro – and the many others that are out there – to really compete and become those global companies that we deserve to be.”

Part of that plan will be contained in the forthcoming Defence Industrial Strategy, expected in the coming months. As Defence Minister David McGuinty has indicated, this strategy will rely heavily on technology entrepreneurs, from startups and SMEs through to major corporations.

InDro Robotics invents and manufactures technologies that have already assisted the Department of National Defence, and we have carried out work directly for the department. (Most recently, InDro and partner CHAAC Technologies carried out a demonstration for DND of an AI-powered landmine detection project that fuses drones, ground robots, and a neural network.)

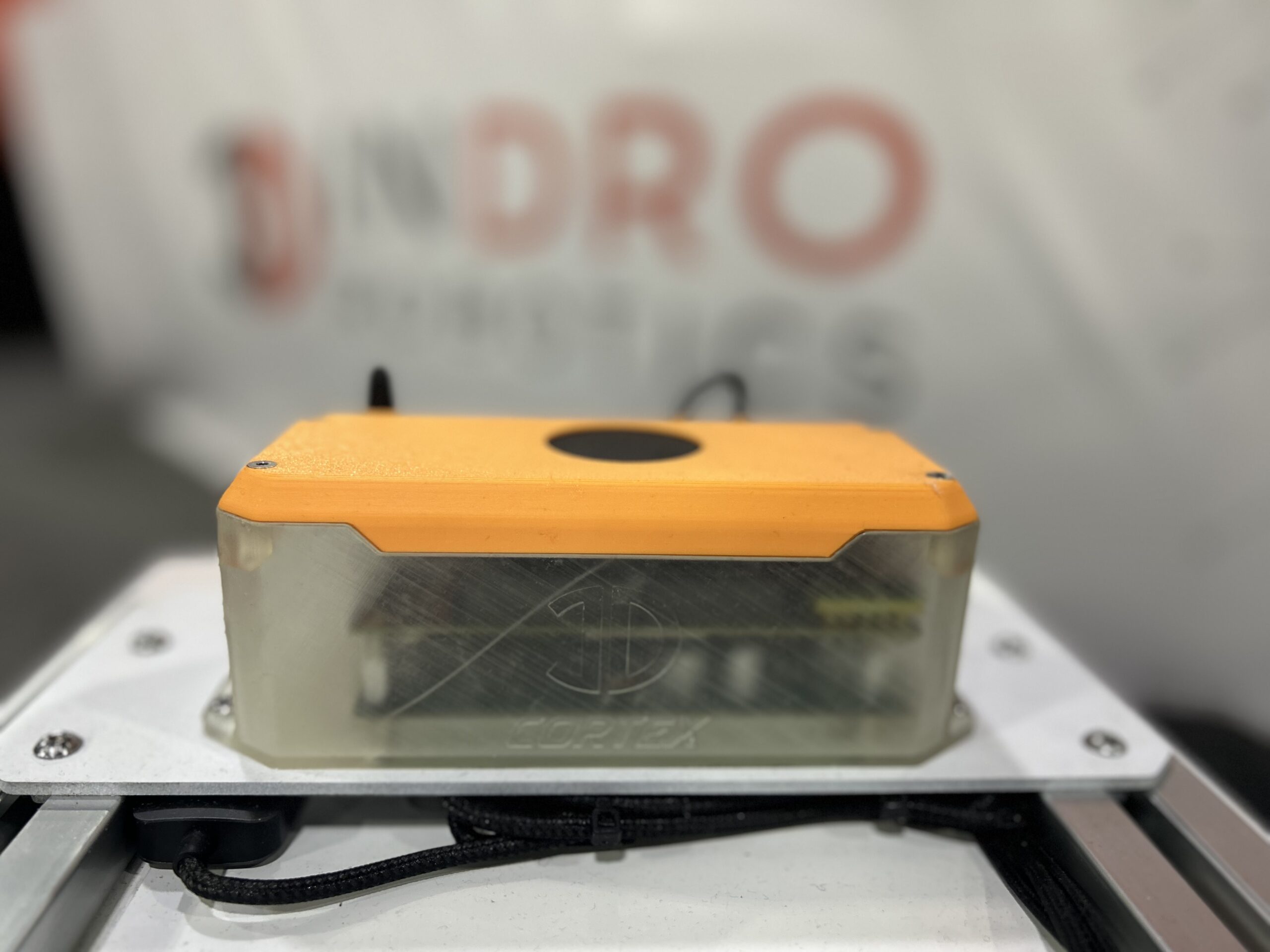

Devices like our dual-purpose Sentinel inspection robot can be put to work in the field for reconnaissance, and our InDro Cortex greatly enhances the capabilities of a wide range of existing devices – including military vehicles and drones. We also have extensive expertise in customised drone and Counter-UAS technologies.

“It’s the same kind of technology now that can be rapidly swapped over to defence,” Reece told The House. “And we have seen that sort of build over the last couple of years, but it needs to build faster.”

“InDro Robotics is ready to deliver on Canada’s defence vision. We have the tech, the talent, and the ambition; now we need a clear path from government that allows us to grow and meet the moment,” says Reece.

Below: Philip’s interview on CBC’s The House, followed by an image of our Cortex, a dual-purpose InDro innovation that can be used for defence, industrial, and civilian purposes.

INDRO’S TAKE

It is indeed a changing world. And we’re pleased (and relieved) to see the Government of Canada recognise the important role that technological innovation will play in our future sovereignty and security. InDro Robotics, and many other tech companies in this country, are ready to answer this call.

“The Canada Strong budget marks a pivotal moment for Canada’s defence and economic resilience,” says InDro Robotics Founder/CEO Philip Reece.

“InDro Robotics welcomes the increased investment and urges the government to now deliver a clear strategy to help Canadian businesses grow into true global leaders, capable of supplying the men and women of the Canadian Armed Forces with the tools and equipment they need and supporting Canada’s trade diversification goals. It is indeed a challenge, and we are up for it.”

We look forward to the forthcoming details of Canada’s Defence Industrial Strategy, and will update you at that time.