Still a long road to fully autonomous passenger cars

By Scott Simmie

We hear a lot about self-driving cars – and that’s understandable.

There are a growing number of Teslas on the roads, with many owners testing the latest beta versions of FSD (Full Self-Driving) software. The latest release allows for automated driving both on highways and in cities – but the driver still must be ready to intervene and take control at all times. Genesis, Hyundai and Kia electric vehicles can actively steer, brake and accelerate on highways while the driver’s hands remain on the wheel. Ford EVs offer something known as BlueCruise, a hands-free mode that can be engaged on specific, approved highways in Canada and the US. Other manufacturers, such as Honda, BMW and Mercedes, are also in the driver-assist game.

So a growing number of manufacturers offer something that’s on the path to autonomy. But are there truly autonomous vehicles intended to transport humans on our roads? If not, how long will it take until there are?

Good question. And it was one of several explored during a panel on autonomy (and associated myths) at the fifth annual CAV Canada conference, which took place in Ottawa December 5. InDro’s own Head of Robotic Solutions (and Tesla owner) Peter King joined other experts in the field on the panel.

Levels of autonomy

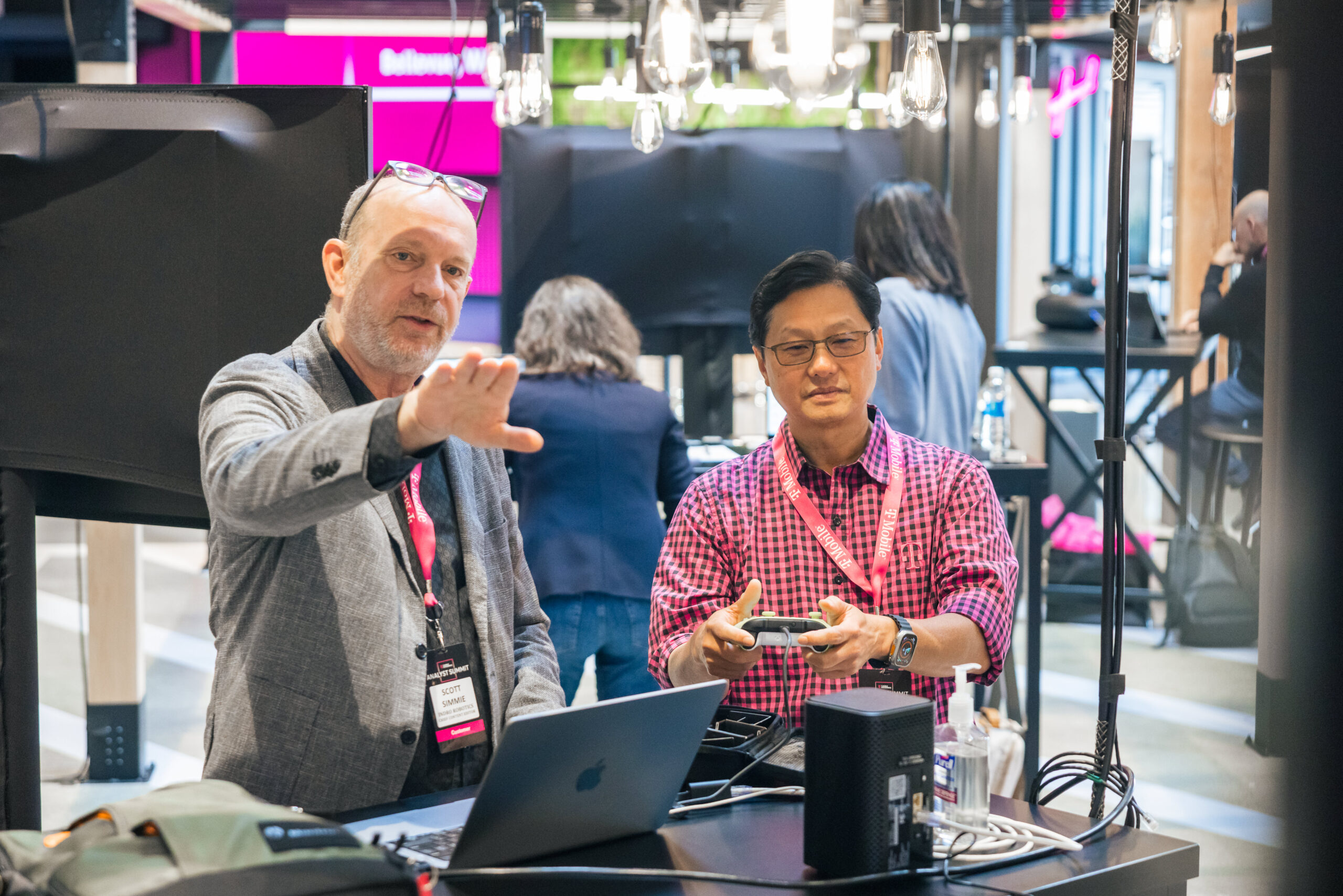

As the panel got underway, there were plenty of acronyms being thrown around. The most common were L2 and L3, standing for Level 2 and Level 3 on a scale of autonomy that ranges from zero to five.

This scale was created by the Society of Automotive Engineers as a reference classification system for motor vehicles. At Level 0, there is no automation whatsoever, and all aspects of driving require human input. Think of your standard car, where you basically have to do everything. Level 0 cars can have some assistive features such as stability control, collision warning and automatic emergency braking. But because none of those features are considered to actually help drive the car, such vehicles remain in Level 0.

Level 5 is a fully autonomous vehicle capable of driving at any time of the day or night and in any conditions, ranging from a sunny day with dry pavement through to a raging blizzard or even a hurricane (when, arguably, no one should be driving anyway). The driver does not need to do anything other than input a destination, and is free to watch a movie or even sleep during the voyage. In fact, a Level 5 vehicle would not need a steering wheel, gas pedal, or other standard manual controls. It would also be capable of responding in an emergency situation completely on its own.

Currently, the vast majority of cars on the road in North America are Level 0. And even the most advanced Tesla would be considered Level 2. There is a Level 3 vehicle on the roads in Japan, but (at least to the best of our knowledge and research) there are currently no Level 3 vehicles in the US or Canada.

As consumer research and data analytics firm J.D. Power describes it:

“It is worth repeating and emphasizing the following: As of May 2021, no vehicles sold in the U.S. market have a Level 3, Level 4, or Level 5 automated driving system. All of them require an alert driver sitting in the driver’s seat, ready to take control at any time. If you believe otherwise, you are mistaken, and it could cost you your life, the life of someone you love, or the life of an innocent bystander.”

To get a better picture of these various levels of autonomy, take a look at this graphic produced by the Society of Automotive Engineers International.

Now we’ve got some context…

So let’s hear what the experts have to say.

The consensus, as you might have guessed, is that we’re nowhere near the elusive goal of a Level 5 passenger vehicle.

“Ten years ago, we were all promised we’d be in autonomous vehicles by now,” said panel moderator Michelle Gee, Business Development and Strategy Director with extensive experience in the automotive and aerospace sectors. Gee then asked panelists for their own predictions as to when Level 4 or 5 vehicles would truly arrive.

“I think we’re still probably about seven-plus years away,” offered Colin Singh Dhillon, CTO with the Automotive Parts Manufacturers’ Association.

“But I’d also like to say, it’s not just about the form of mobility, you have to make sure your infrastructure is also smart as well. So if we’re all in a bit of a rush to get there, then I think we also have to make sure we’re taking infrastructure along with us.”

It’s an important point.

Vehicles on the path to autonomy currently have to operate within an infrastructure originally built for human beings operating Level 0 vehicles. Such vehicles, as they move up progressive levels of autonomy, must be able to scan and interpret signage and traffic lights, understand weather and traction conditions, and much more.

Embedding smart technologies along urban streets and even on highways could help enable functionalities and streamline data processing in future. If a Level 4 or 5 vehicle ‘knew’ there was no traffic coming at an upcoming intersection, there would be no need to stop. In fact, if *all* vehicles were Level 4 or above, smart infrastructure could negate the need for traffic lights and road signs entirely.

Seven to 10 years?

If that’s truly the reality, why is there so much talk about autonomous cars right now?

The answer, it was suggested, is in commonly used – but misleading – language. The term “self-driving” has become commonplace, even when referring solely to the ability of a vehicle to maintain speed and lane position on the highway. Tesla refers to its beta software as “Full Self-Driving.” And when consumers hear that, they think autonomy – even though such vehicles are only Level 2 on the autonomy scale. So some education around language may be in order, suggested some panelists.

“It’s the visual of the word ‘self-driving’ – which somehow means: ‘Oh, it’s autonomous.’ But it isn’t,” explained Dhillon. “…maybe make automakers change those terms. If that was ‘driver-assisted driving,’ then I don’t think people would be sleeping at the wheel whilst they’re on the highway.”

One panelist suggested looking ahead to Level 5 may be impractical – and even unnecessary. Recall that Level 5 means a vehicle capable of operating in all conditions, including weather events like hurricanes, where the vast majority of people would not even attempt to drive.

“It’s not safe for a human to be out in those conditions…I think we should be honing down on the ‘must-haves,’” offered Selika Josiah Talbott, a strategic advisor known for her thoughtful takes on autonomy, EVs and mobility.

“Can it move safely within communities in the most generalised conditions? And I think we’re clearly getting there. I don’t even know that it’s (Level 5) something we need to get to, so I’d rather concentrate on Level 3 and Level 4 at this point.”

InDro’s Peter King agrees that Level 5 isn’t coming anytime soon.

“I believe the technology will be ready within the next 10 years,” he says. “But I believe it’ll take 30-40 years before we see widespread adoption due to necessary changes required in infrastructure, regulation and consumer buy-in.”

And that’s not all.

“A go-to-market strategy for Level 5 autonomy is a monumental task. It involves significant investments in technology and infrastructure – and needs to be done so in collaboration with regulators while also factoring in safety and trust from consumers with a business model that is attainable for the masses.”

What about robots?

Specifically, what about Uncrewed Ground Vehicles like InDro’s Sentinel inspection robot, designed for monitoring remote facilities like electrical substations and solar farms? Sentinel is currently teleoperated over 4G and 5G networks with a human controlling the robot’s actions and monitoring its data output.

Yet regular readers will also know we recently announced InDro Autonomy, a forthcoming software package we said will allow Sentinel and other ROS2 (Robot Operating System) machines to carry out autonomous missions.

Were we – perhaps like some automakers – overstating things?

“The six levels of autonomy put together by the SAE are meant to apply to motor vehicles that carry humans,” explains Arron Griffiths, InDro’s lead engineer. In fact, there’s a separate categorization for UGVs.

The American Society for Testing and Materials (ASTM), which creates standards, describes those tiers as follows: “Currently, an A-UGV can be at one of three autonomy levels: automatic, automated, or autonomous. Vehicles operating on the first two levels (automatic and automated) are referred to as automatic guided vehicles (AGVs), while those on the third are called mobile robots.”

“With uncrewed robots like Sentinel, we like to think of autonomy as requiring minimal human intervention over time,” explains Griffiths. “Because Sentinel can auto-dock for wireless recharging in between missions, we believe it could go for weeks – quite likely even longer – without human intervention, regardless of whether that intervention is in-person or virtual,” he says.

“The other thing to consider is that these remote ground robots, in general, don’t have to cope with the myriad of inputs and potential dangers that an autonomous vehicle driving in a city must contend with. Nearly all of our UGV ground deployments are in remote and fenced-in facilities – with no people or other vehicles around.”

So yes, given that InDro’s Sentinel will be able to operate independently – or with minimal human intervention spread over long periods – we are comfortable with saying that machine will soon be autonomous. It will even be smart enough to figure out shortcuts over time that might make its data missions more efficient.

It won’t have the capabilities of that elusive Level 5 – but it will get the job done.

InDro’s take

Autonomy isn’t easy. Trying to create a fully autonomous vehicle that can safely transport a human (and drive more safely than a human in all conditions) is a daunting task. We expect Level 5 passenger vehicles will come, but there’s still a long road ahead.

Things are easier when it comes to Uncrewed Ground Vehicles collecting data in remote locations (which is, arguably, where they’re needed most). They don’t have to deal with urban infrastructure, unpredictable drivers, reading and interpreting signage, etc.

That doesn’t mean it’s easy, of course – but it is doable.

And we’re doing it. Stay tuned for the Q1 release of InDro Autonomy.