Boston University uses AgileX LIMO for research

By Scott Simmie

What will the Smart Cities of the future look like?

More specifically, how will the many anticipated devices operate – and cooperate – in this coming world? How will connected and autonomous vehicles interact to ensure the greatest efficiency with minimal risk? How might ground robots and drones fit into this scheme? And how can researchers even test algorithms without a fleet of connected vehicles, which would obviously incur great costs and require huge testing areas?

In the case of Boston University, the answer is in a small but powerful robot called LIMO.

A VERSATILE PLATFORM

We spoke with three engineers from Boston University, each of whom is working with the AgileX LIMO platform. Before we get into an overview of their research, it’s worth taking a look at LIMO itself. Here’s how the manufacturer describes the product:

“LIMO is an innovative multi-modal, compact, and customizable mobile robot with AI modules and open-source ROS (Robot Operating System) packages, which enables education, researchers, enthusiasts to program and develop AI robots easier. The LIMO has four steering modes including Omni-directional steering, tracked steering, Ackerman and four-wheel differential, in line with strong perception sensors and Nvidia Jetson Nano, making it a better platform to develop more indoor and outdoor industrial applications while learning ROS.”

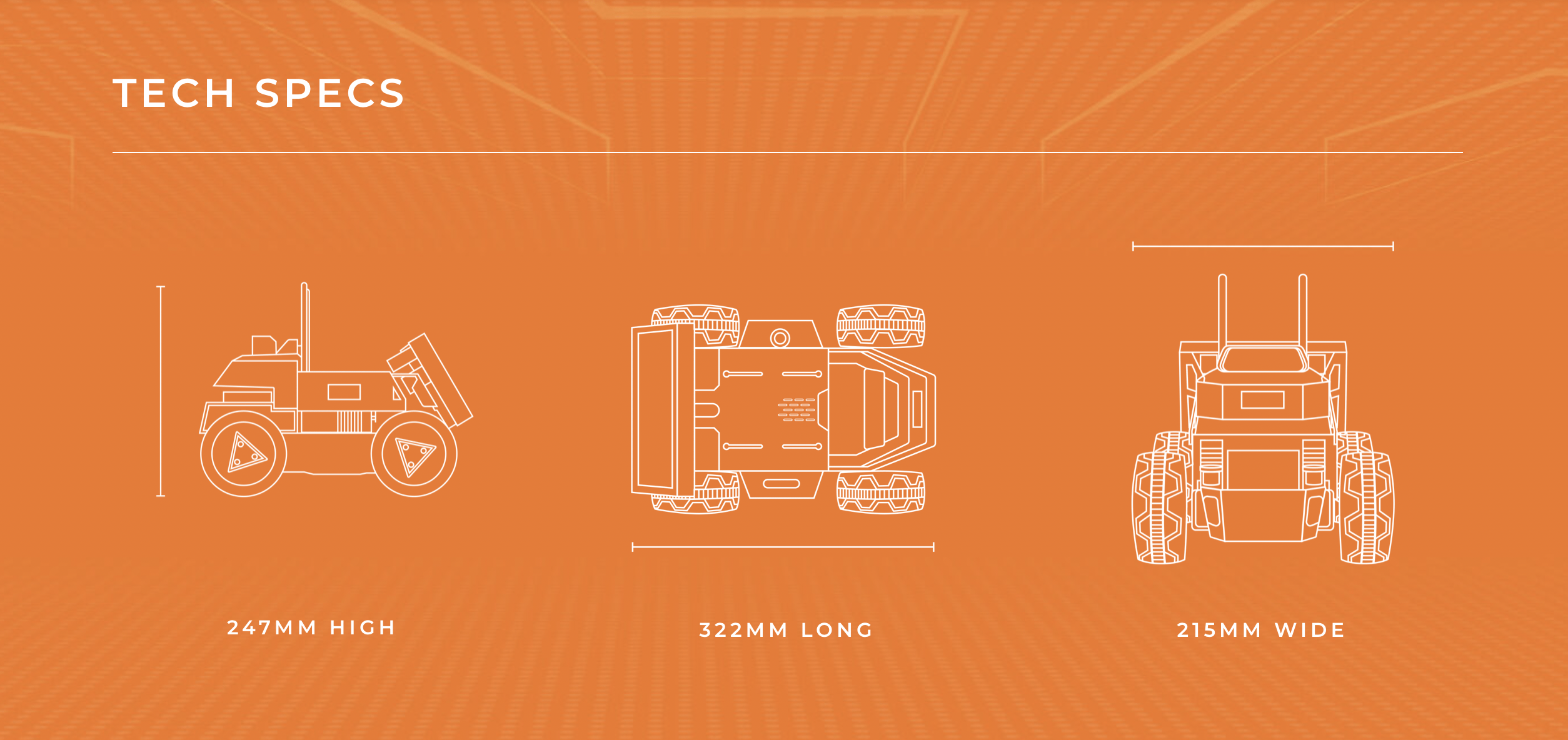

And it all comes in a pretty compact package:

CAPABLE

While its user-friendly design is suitable for even enthusiasts and students to operate, its sophisticated capabilities mean it’s also perfect for high-end research. (You can find full specs on the product here.)

LIMO can detect objects in its surroundings and avoid them, and is even capable of Simultaneous Localisation and Mapping (SLAM). With a runtime of 40 minutes, extended missions are possible.

Here’s a look at LIMO in action, which provides a pretty good overview of its capabilities:

HIGH-LEVEL RESEARCH

We spoke with three people from Boston University, each of whom is using LIMO for different purposes. The three are:

- Christos Cassandras, Distinguished Professor of Engineering, Head of the Division of Systems Engineering, and Professor of Electrical and Computer Engineering

- Alyssa Pierson, Assistant Professor, Department of Mechanical Engineering

- Mela Coffey, Graduate Research Assistant and PhD candidate under Alyssa Pierson in Mechanical Engineering

Cassandras is focused on groups of robots working cooperatively (and sometimes uncooperatively), called Multi-Agent Systems. If you think ahead to a connected Smart City of the future, the cars on the road would be Connected Automated Vehicles (CAVs). They would all be aware of each other and make autonomous decisions that ensure both safety and efficiency. Far enough down the road, today’s traffic signals, stop signs and more would likely not be needed because the vehicles are collectively part of a network.

“These vehicles become nodes in an Internet in which the vehicles talk to each other,” says Cassandras.

“They exchange information and so, ideally cooperatively, they can improve metrics of congestion, of energy, of pollution, of comfort, of safety – perhaps safety being predominant.”

In the video below, you’ll see LIMOs driving cooperatively, calculating in real-time the most efficient way to merge:

MAKING THE TRANSITION

But as we head toward this future, there will be a blend of regular cars and autonomous vehicles until the transition to automated driving is complete. And that period of transition will create its own challenges, which also interest Cassandras.

“So typically what we expect within the next, let’s say five to 10 years, is a mixture of the smart connected autonomous vehicles and the regular vehicles that we typically refer to as Human Driven Vehicles or HDVs. So the idea is: How can we get these teams of autonomous agents to work together?”

Obviously, testing this in a real-world scenario – with a blend of autonomous and HDVs – would be hugely expensive and require closed roads, etc. Enter LIMO – or, more accurately, a fleet of LIMOs.

“Since I can’t use dozens of real vehicles, I would like to use dozens of small robots that can be thought of as these autonomous vehicles, (which can) talk to each other, cooperate,” he says. “But also sometimes they don’t really cooperate if some of them are the HDVs. So what we are doing in our Boston University Robotics Lab, of which Alyssa and I are members along with several other colleagues, is we deploy these LIMOs that we have acquired as teams of autonomous vehicles.”

And what kinds of scenarios are they looking at? Well, consider how things work now. Cars stop at red lights, idle, and then quickly accelerate when the light turns green. This is hugely inefficient and adds to pollution. Wouldn’t it be better if there were no traffic lights at all, and vehicles could safely navigate around one another at peak efficiency? Well, of course. And that’s the kind of work Cassandras is conducting with a fleet of LIMOs at the Boston University Robotics Lab.

He is also one of the authors of a scientific paper that will be presented at the 7th IEEE Conference on Control Technology and Applications (CCTA) in August. That paper is entitled: “Optimal Control of Connected Automated Vehicles with Event-Triggered Control Barrier Functions: a Test Bed for Safe Optimal Merging.”
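For readers curious what a control barrier function (CBF) looks like in practice, here is a minimal sketch. It is not the method from the paper: it is a simplified, time-driven (not event-triggered) illustration for one robot following another in a single lane. The barrier, the parameter values and every name in it (`FollowerState`, `safe_accel`, `simulate`) are assumptions made up for this example. The idea is that the robot asks for whatever acceleration it wants, and a filter caps that request so the gap to the vehicle ahead never drops below a speed-dependent safe distance.

```python
from dataclasses import dataclass

# Hypothetical parameters chosen for illustration only.
HEADWAY = 1.0         # desired time headway, in seconds
STANDSTILL_GAP = 0.3  # minimum gap at zero speed, in metres


@dataclass
class FollowerState:
    gap: float    # distance to the vehicle ahead, in metres
    speed: float  # follower speed, in m/s


def barrier(state):
    """Barrier value h; the state is considered safe while h >= 0."""
    return state.gap - HEADWAY * state.speed - STANDSTILL_GAP


def safe_accel(state, lead_speed, desired_accel,
               alpha=1.0, accel_min=-2.0, accel_max=1.0):
    """Cap a desired acceleration using the condition dh/dt + alpha * h >= 0.

    With dh/dt = (lead_speed - speed) - HEADWAY * accel, the condition
    becomes an upper bound on the acceleration.
    """
    cbf_bound = ((lead_speed - state.speed) + alpha * barrier(state)) / HEADWAY
    accel = min(desired_accel, cbf_bound)
    # Actuator limits are applied last. If cbf_bound is below accel_min the
    # constraint cannot be met; this sketch does not handle that case.
    return max(accel_min, min(accel_max, accel))


def simulate(steps=400, dt=0.05, lead_speed=0.5):
    """Follower keeps asking to speed up behind a slower leader."""
    state = FollowerState(gap=3.0, speed=1.0)
    min_h = barrier(state)
    for _ in range(steps):
        accel = safe_accel(state, lead_speed, desired_accel=0.5)
        # Forward-Euler update of gap and speed (leader speed is constant).
        state = FollowerState(
            gap=state.gap + dt * (lead_speed - state.speed),
            speed=state.speed + dt * accel,
        )
        min_h = min(min_h, barrier(state))
    return min_h, state.speed


if __name__ == "__main__":
    min_h, final_speed = simulate()
    print(f"smallest barrier value: {min_h:.4f}, final speed: {final_speed:.3f}")
```

In this toy run the follower starts out faster than the leader and keeps requesting more speed, but the filter gradually overrides the request and the follower settles in behind the leader at a safe gap. A real merging problem involves more vehicles, more constraints and an optimal controller supplying the desired acceleration.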

RASTIC

This fall, Boston University will open a new facility called the Robotics & Autonomous Systems Teaching & Innovation Center (RASTIC). There will be an area dedicated to mimicking a Smart City, with large numbers of LIMOs driving cooperatively (and sometimes uncooperatively). Cassandras says he intends to use a ceiling-based projector to create a simulated network of roads and obstacles on the floor for LIMOs to navigate.

“I envision about 20 to 30 LIMOs moving around, communicating – trying to get from Point A to Point B without hitting each other, as fast as possible, making turns, stopping at traffic lights if there are traffic lights, and so on… That’s the grand vision. And RASTIC is intended for teaching as opposed to research.”

Other research using LIMOs will continue, meanwhile, at the existing Boston University Robotics Lab.

The following video, and this link, help explain RASTIC – and why this will be a significant facility for the Engineering Department.

THE IOT

So that’s one part of the research using LIMOs. But wait, there’s more!

Assistant Professor Alyssa Pierson is also interested in Multi-Agent Systems. But her work focusses less on the autonomous vehicle side of things, and more on general small-scale autonomous platforms. Think of delivery robots, drones, or even some other autonomous sensor platform making its way through the world.

“So thinking about instead of saying that two agents are inherently cooperative or non-cooperative, what are all those nuanced interactions in between?” says Pierson.

“What does it mean if robots and a team have reputation that they can share among other robots? How does that change the underlying interactions? And we’re looking at these things, what reputation might mean, for instance, in perhaps robot delivery problems. How do they decide how to share resources, how to deliver supplies? The LIMOs provide a hardware platform to demonstrate these new algorithms that we propose.”

Graduate Research Assistant Mela Coffey is involved with this work, as well as some of her own as a PhD candidate.

Below: LIMO navigates obstacles, including dogs

THE HUMAN FACTOR

Both Coffey and Pierson are also interested in how humans play a role in this world. And, more specifically, how robots might gather data that could assist humans in their own decision-making while tele-operating robots. Perhaps the robots might suggest that the human operator choose a more efficient route, for example.

It’s serious research, and a scientific paper on it has just been accepted for the upcoming International Conference on Intelligent Robots and Systems, IROS.

Coffey says the LIMO is perfect for this kind of research because it offers a hassle-free platform.

“From the start, they’ve been super easy to set up,” she says. “It’s nice just being able to take the robots right out of the box and there’s very minimal setup that we have to do. As roboticists, we don’t want to focus on the hardware – we want to just put our algorithms on the robot and show that our algorithms can work in real-time on these robots.”

Boston University has purchased a significant fleet of LIMOs from InDro.

“I think roughly total is about 30,” says Cassandras. “One of the things I was unhappy about with other small robots that we’ve worked with is that they would break a lot. That’s to be expected – if you have 10 and a couple break after a few months, that’s OK. But if you have 10 and six break, that’s not good. The LIMOs have been very reliable.”

INDRO’S TAKE

Account Executive Luke Corbeth is the person who put these LIMOs into the hands of Boston University. He says it’s truly a perfect platform for such research.

“Since LIMO is multi-modal, researchers can test their algorithms with a differential, Ackerman, omnidirectional or tracked system without needing to purchase 4 separate units,” he says.

“The LIMO comes equipped with all the hardware needed for multi-robot teaming. It’s rare to find such a versatile and budget-friendly platform with the compute, connectivity, cameras and sensors that are needed to make this type of project possible.”
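To see why the drive mode matters to an algorithm, consider how the same robot turns in two of those modes. The sketch below uses the standard textbook kinematic models: an idealised differential drive (no wheel slip) and the bicycle approximation of Ackermann steering. The function names and the dimensions in the usage lines are placeholders invented for this example, not LIMO specifications or part of any LIMO software.

```python
import math


def differential_twist(left_speed, right_speed, track_width):
    """Forward and yaw velocity of an ideal differential base.

    Wheel speeds are in m/s, track_width (left-to-right wheel spacing) in
    metres. Returns (linear m/s, angular rad/s).
    """
    linear = (right_speed + left_speed) / 2.0
    angular = (right_speed - left_speed) / track_width
    return linear, angular


def ackermann_twist(speed, steering_angle, wheelbase):
    """Forward and yaw velocity under the bicycle approximation.

    steering_angle is in radians, wheelbase (front-to-rear axle spacing) in
    metres. Returns (linear m/s, angular rad/s).
    """
    angular = speed * math.tan(steering_angle) / wheelbase
    return speed, angular


if __name__ == "__main__":
    # Placeholder dimensions, not taken from the LIMO spec sheet.
    print(differential_twist(-0.2, 0.2, track_width=0.2))  # spins in place
    print(ackermann_twist(0.0, 0.3, wheelbase=0.2))        # cannot turn while stopped
```

The two usage lines show the practical difference: a differential base can rotate on the spot, while an Ackermann base has zero yaw rate at zero speed, so a planner written for one will not necessarily work on the other.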

Corbeth deals with the majority of InDro clients – and is passionate about his work, and the work people like the Boston University team are doing.

“I genuinely believe in a future where robots make our lives easier. My clients are the ones pushing us towards that future, so it’s satisfying to enable this sort of work. Above and beyond the research aspect, I know the students of my clients are learning a lot from using this robot, so it’s gratifying to know we’re assisting the next generation of innovators as well.”

And the best part? Priced at under $3000, LIMO is affordable, even for clients with limited budgets.

Interested? Learn more about LIMO and book a demo here.